Many people are looking into running their VMware Cloud Foundation (VCF) labs across multiple physical hosts, especially since VCF 9 was released with increased resource demands. Some may be intimidated by the requirement for a managed network switch that supports VLAN trunking, since VCF requires many VLANs that must be available across all the physical hosts. It is also a great advantage to have at least 10 or 25 GbE, since a lot of traffic will travel between the nested hosts. This post shows how you can add 25 GbE between two physical hosts running nested VCF without a network switch at all. Your nested environment can still reach the outside world through a VM acting as a router, like the Holorouter if you are using Holodeck.

If you need more than two physical hosts, you will need a managed switch supporting 802.1Q VLAN trunking, since a direct cable connection only works between two servers.

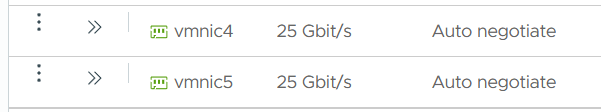

I use two Mellanox ConnectX-4 LX dual-port 25 GbE NICs, which are supported by both ESXi 8 and ESX 9, and to connect them together I use a pair of SFP28-to-SFP28 25G DAC cables.

ESXi automatically detects the cards, and the link speed is auto-negotiated to 25 Gbit/s.

To use the NICs with nested VCF, we must create a port group with VLAN trunking enabled. I also enable Forged transmits and MAC Learning, which give much better performance than promiscuous mode can deliver. No configuration is required on the NICs themselves.
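
As a quick reference, the port group settings described above can be written down as plain data and checked. This is only a minimal sketch in Python, not a call into any VMware API: the `NestedPortGroup` class, the `nested_readiness_problems` function, the port group name, and the full 0-4094 trunk range are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class NestedPortGroup:
    """Hypothetical plain-data model of a port group (not a VMware API object)."""
    name: str
    vlan_trunk: Tuple[int, int]  # inclusive range of 802.1Q VLAN IDs to trunk
    forged_transmits: bool
    mac_learning: bool
    promiscuous: bool


def nested_readiness_problems(pg: NestedPortGroup) -> List[str]:
    """Return a list of settings that differ from the setup described in the post."""
    problems = []
    low, high = pg.vlan_trunk
    if not (0 <= low <= high <= 4094):
        problems.append("VLAN trunk range must lie within 0-4094")
    if not pg.forged_transmits:
        problems.append("Forged transmits is not enabled")
    if not pg.mac_learning:
        problems.append("MAC Learning is not enabled")
    if pg.promiscuous:
        problems.append("promiscuous mode is enabled; MAC Learning performs better")
    return problems


# Assumed example values: trunk every VLAN ID, rely on MAC Learning
# instead of promiscuous mode.
trunk_pg = NestedPortGroup(
    name="nested-vcf-trunk",
    vlan_trunk=(0, 4094),
    forged_transmits=True,
    mac_learning=True,
    promiscuous=False,
)

print(nested_readiness_problems(trunk_pg))
```

An empty list means the port group matches the settings used in this post. You may prefer a narrower trunk range that covers only the VLANs your nested VCF deployment actually uses.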

Another thing that can simplify the setup is to skip shared storage between the physical ESX hosts. You can deploy the nested ESX hosts on local storage, preferably NVMe devices. By creating two single-host vSphere clusters, one for each physical ESX host, and enabling the same EVC mode on both clusters, you can still live migrate VMs between the physical hosts to distribute the load. DRS and vSphere HA will not be possible at the physical level, though. Inside your nested VCF environment everything will still be possible: vSAN ESA, DRS, HA, and so on.
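
Without shared storage, a live migration also has to copy the VM's disks over the link between the hosts, which is where the 25 GbE connection pays off. The back-of-the-envelope sketch below estimates the best-case copy time. The 500 GB VM size and the 80% link efficiency are assumptions chosen for illustration, not measurements, and real migrations are also limited by storage speed and memory copy.

```python
def transfer_seconds(size_gb: float, link_gbit: float, efficiency: float = 0.8) -> float:
    """Estimate the seconds needed to copy size_gb gigabytes over a link.

    link_gbit is the nominal link speed in Gbit/s; efficiency is an assumed
    fraction of that speed that is actually usable for the copy.
    """
    if size_gb < 0 or link_gbit <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, link speed > 0, efficiency in (0, 1]")
    gigabits_to_move = size_gb * 8  # 1 byte = 8 bits
    return gigabits_to_move / (link_gbit * efficiency)


# Compare common link speeds for an assumed 500 GB nested ESX host VM.
for link in (1, 10, 25):
    minutes = transfer_seconds(500, link) / 60
    print(f"{link:>2} GbE: about {minutes:.1f} minutes")
```

Under these assumptions the copy takes well over an hour on 1 GbE but only a few minutes on 25 GbE.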

I hope this post saved you some money and frustration.